As digitalization accelerates, the amount of data keeps growing. Some of it is extremely valuable, while some suffers from a lack of quality control. Every business needs quality data, because it is hard to offer customers a decent product without it. That’s why data quality management is essential.

Data quality management leads to better decision-making and makes data-driven processes more efficient. The results of your work improve, and you gain more control over potential issues. Low-quality data is a common reason projects fail, even when they were in demand on the market at the start, because it hurts productivity and disrupts the normal flow of work.

Data quality management (DQM) lifts an organization’s performance and helps secure its future. If you are interested in these principles, Inoxoft is a company to consider. This guide covers why DQM matters and the best practices for applying it. Let’s get started!

- What Is Data Quality Management?

- What Is Data Quality Control?

- Why Use Data Quality Management?

- Why You Need DQM for Your Business?

- Improved business operations

- Resource efficiency

- Competitive edge

- Targeted marketing success

- What Are The Components of Data Quality Management?

- Organizational structure

- Data quality definition

- Data profiling audits

- Data reporting and monitoring

- Correcting errors

- Elements of Data Quality Management

- Importance of DQM in Big Data

- Data Quality Timing and Placement

- DQM Metrics

- What Are Data Quality Management Tools?

- Data Quality Management Best Practices

- Making data quality a priority

- Automating data entry

- Preventing duplicates, not just curing them

- Taking care of both master and metadata

- Top 3 Sources of Low-Quality Data

- Source 1: Mergers and acquisitions

- Source 2: Transitions from legacy systems

- Source 3: User error

- Consider Inoxoft Your Trusted Partner

What Is Data Quality Management?

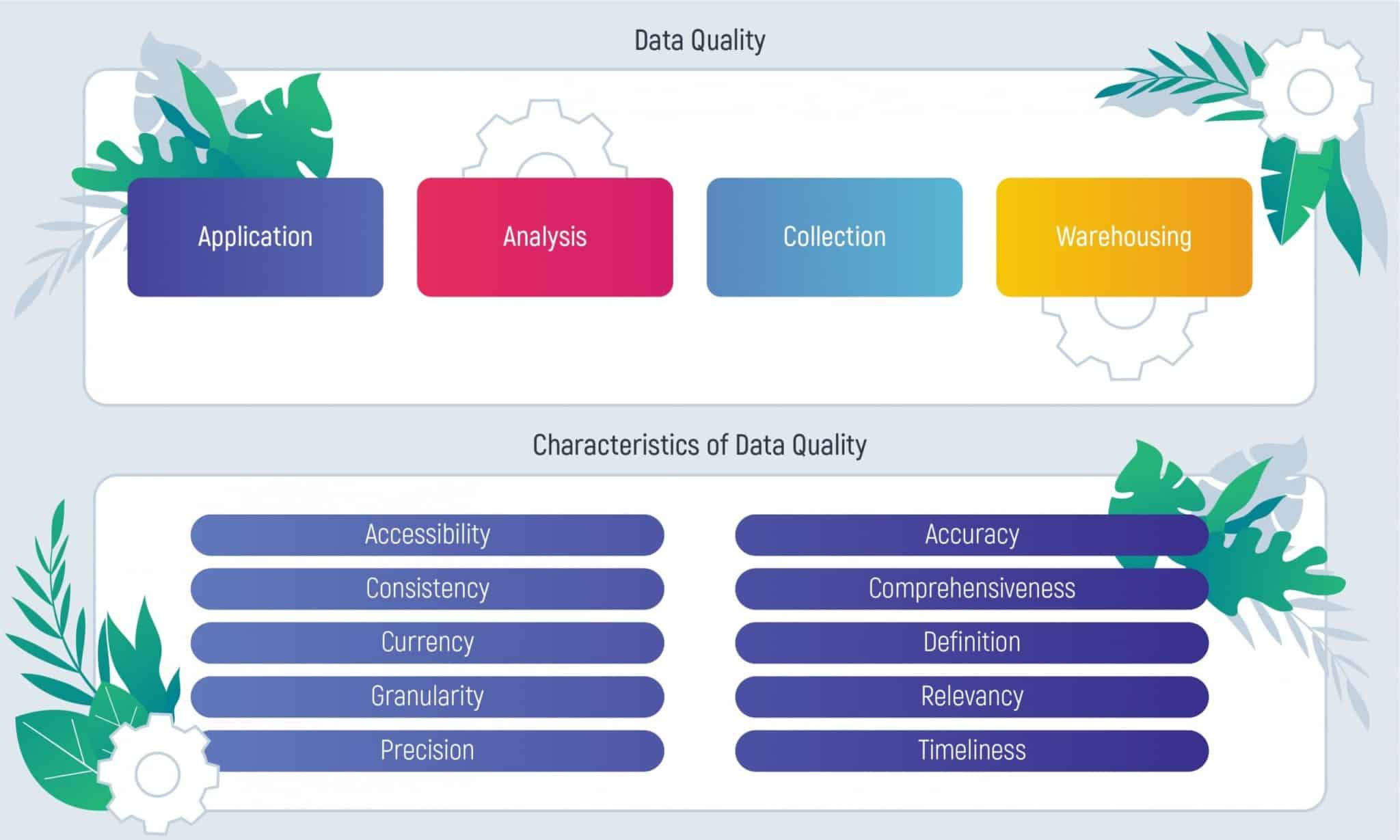

The first question is simple: what is data quality management? Put simply, it is a set of practices and tools for keeping information at a high level of quality. They improve the data used for analysis and estimation, which underpins nearly every work process. Data quality is also referred to as the “health of the data”. Keep in mind that even slightly outdated data can be damaging in the long run.

The quality of data is defined by how well it meets the needs of its users. High-quality data is also flexible and easy to process. At the same time, everyone can have their own view of what counts as high-quality data, which is why data quality dimensions exist. These are measurable categories that define the quality of the data.

What Is Data Quality Control?

Data quality control is an organization’s systematic approach to quality data management. It preserves data accuracy, consistency, completeness, and reliability across its entire lifecycle. The process has two phases: pre-assurance and post-assurance.

In the first phase, data is subjected to screening to ensure it adheres to established quality standards. Access to this data is restricted to authorized personnel, enhancing security and quality control.

The second phase involves detecting and correcting data inconsistencies, using statistical metrics to assess data quality. Actions in this phase may include data blocking, correction, continuous monitoring, and governance.
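
As an illustration of the second phase, here is a minimal Python sketch of one way to flag suspicious values with a statistical metric. The article does not say which metric to use, so the modified z-score (based on the median absolute deviation), the 3.5 threshold, and the names `flag_outliers` and `order_totals` are all assumptions chosen for the example.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds threshold.

    Assumption: the modified z-score with the conventional 0.6745 constant
    and 3.5 cut-off is used here; other statistical checks would work too.
    """
    med = median(values)
    abs_dev = [abs(v - med) for v in values]
    mad = median(abs_dev)  # median absolute deviation
    if mad == 0:
        return []  # no spread to measure against, so nothing is flagged
    return [i for i, d in enumerate(abs_dev) if 0.6745 * d / mad > threshold]


# Hypothetical order totals with one entry that looks like a typo.
order_totals = [102.0, 98.5, 101.2, 99.9, 100.4, 1000.0]
suspect = flag_outliers(order_totals)  # flags index 5, the 1000.0 entry
```

Flagged records could then be blocked or sent for correction, which matches the actions listed for this phase.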

Why Use Data Quality Management?

Data quality management is an effective way to organize data and make it useful: it helps you find errors and fix them as part of the process.

Because data underlies every process, managing data quality issues helps core operations run quickly and efficiently, and the business functions better as a result. Poor data also means money is spent unwisely, so DQM cuts costs and prevents wasted resources. Staying relevant in the market matters too: quality data gives you the competitiveness you need to stay in demand. In short, incorporating DQM into your business is highly beneficial.

Why You Need DQM for Your Business?

Data abundance brings a challenge sometimes called a data crisis. Large volumes of data are often plagued by low quality, which makes it difficult for businesses to extract valuable insights or use the data effectively.

DQM has emerged as a pivotal solution to this problem. It helps organizations pinpoint and remedy data errors and ensures that the data in their systems remains accurate and well suited to its intended use. Let’s explore four compelling reasons your business should prioritize data quality management.

Improved business operations

Properly managed data, with the assistance of data quality management services, accelerates and streamlines all fundamental business operations. High-quality data enhances decision-making at every management and operations level, contributing to smoother and more efficient business processes.

Resource efficiency

Low-quality data often leads to inefficient use of resources, including financial ones. Implementing DQM practices in data processing saves businesses from wasting resources and ultimately leads to better outcomes.

Competitive edge

A business with a strong reputation gains a competitive advantage. Managing data quality helps a business maintain a positive reputation, because low-quality data may erode customer trust and satisfaction and affect how its products and services are perceived.

Targeted marketing success

Creating marketing campaigns based on inaccurate data or leads sourced from poor-quality data is unproductive. Accurate customer data enables better campaign targeting, higher conversion rates, and more effective outreach.

What Are The Components of Data Quality Management?

Data quality management has several components, each with its own roles and responsibilities. Together they help you carry out the necessary processes and basic data operations. Here are the main components you need to know.

Organizational structure

To bring a new strategy into your business, it’s important to give team members specific roles. You should have a DQM Program Manager, who is responsible for keeping the data in check; an Organization Change Manager, who makes the main data infrastructure decisions; a Business Analyst, who reports on data; and a Data Steward, who manages data as a corporate asset.

Data quality definition

This component defines what you expect from your data. Basically, it’s the level of quality you set for it. Everyone understands the term “quality” differently, but there are basic properties your data should have: integrity, completeness, validity, uniqueness, accuracy, consistency, accountability, transparency, protection, and compliance.

Data profiling audits

Put simply, data profiling is the process of examining data to check its quality. Auditors validate data against set requirements and then report on the data quality. Done regularly, these audits have a real positive effect on data quality.
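
To make the idea more concrete, here is a small Python sketch of what a basic profiling report for one column might contain. It is only an illustration: the function name `profile_column`, the choice of statistics, and the sample `customers` records are assumptions, not part of any specific profiling tool.

```python
def profile_column(rows, column):
    """Summarize one column of a list of dict records.

    Assumption: None and the empty string both count as missing values.
    """
    values = [row.get(column) for row in rows]
    present = [v for v in values if v not in (None, "")]
    return {
        "rows": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
    }


# Hypothetical customer records used only for this example.
customers = [
    {"id": 1, "country": "UA"},
    {"id": 2, "country": ""},
    {"id": 3, "country": "US"},
    {"id": 4, "country": "UA"},
    {"id": 5},
]
report = profile_column(customers, "country")
```

An auditor could compare a report like this against the agreed requirements, for example a maximum share of missing values per column.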

Data reporting and monitoring

The main task of this function is to catch errors that affect data quality. It is important to capture mistakes before you use the data, as they can cause problems in the long run.

Correcting errors

The last component of data quality management is resolving the issues found along the way. Once reporting and monitoring have helped you find the mistakes, you can fix errors, complete the data, and remove any duplicates.

Elements of Data Quality Management

Quality data management, an integral part of an effective data quality management system, includes data cleansing and profiling that enhance organizational data’s reliability and trustworthiness. These key elements ensure that data is accurate, consistent, and fit for various analytical and operational purposes.

Data cleansing involves correcting unknown or incorrect data types. It ensures that each data element is accurately categorized and establishes data hierarchies.
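
Correcting data types can be sketched in a few lines of Python. The example below assumes records arrive as dictionaries of raw strings and that the expected type of each field is known in advance; `coerce_record` and the `schema` mapping are hypothetical names introduced for illustration.

```python
def coerce_record(record, schema):
    """Convert each field to its expected type.

    Returns the cleaned record and a list of fields that could not be
    converted (these are set to None so they can be reviewed later).
    """
    cleaned, problems = {}, []
    for field, caster in schema.items():
        raw = record.get(field)
        try:
            if raw is None:
                raise ValueError("missing value")
            cleaned[field] = caster(raw)
        except (TypeError, ValueError):
            cleaned[field] = None
            problems.append(field)
    return cleaned, problems


# Assumed target types for a hypothetical order record.
schema = {"order_id": int, "amount": float, "customer": str}

good, good_problems = coerce_record(
    {"order_id": "1042", "amount": "19.90", "customer": "Ann"}, schema
)
bad, bad_problems = coerce_record({"order_id": "10x", "amount": None}, schema)
```

Keeping a list of problem fields, rather than silently dropping records, leaves the final decision about each record to a person or a later rule.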

Data profiling processes diligently identify and remove duplicates, thus enhancing the overall integrity of the dataset. Duplicate entries can lead to skewed analyses and yield inaccurate insights.

Importance of DQM in Big Data

The surge in streaming and shipment tracking data creates new challenges. When data is repurposed across contexts, its meaning can vary, which raises questions about validity and consistency. Solid data quality is vital for making sense of diverse big data sets.

Validating externally sourced data in big data is challenging due to the lack of built-in validation controls, risking inconsistencies. Balancing oversight and data quality is essential. Data rejuvenation extends historical data’s life but demands validation and governance. Extracting insights from old data requires proper integration into newer sets. It is crucial for preserving historical data integrity.

Data Quality Timing and Placement

DQM’s timing and application depend on real-world contexts. For urgent processes like credit card transactions, real-time data accuracy is vital to identify fraud promptly, benefiting customers and businesses.

Conversely, tasks like loyalty card updates can utilize overnight processing, maintaining data quality without immediate demand. These instances highlight the need for aligning data quality with specific task requirements and prioritizing efficiency and responsiveness to customer needs.

DQM Metrics

A data quality management model needs measurements. Metrics should be clearly defined and cover all the relevant features of the data. Here are some of the main data quality management metrics.

- Accuracy. It’s the extent to which data meets requirements and correctly reflects the object or event it describes.

- Completeness. The data is considered to be complete if it is enough to fulfill all the demands.

- Consistency. Data can be considered consistent only if its values do not contradict each other.

- Integrity. The data should be tested to make sure that it complies with the organization’s procedures.

- Timeliness. Data should be up to date and available to users whenever they need it, at any time of day and anywhere in the world.

- Data storage expenses. If your organization spends more and more on storing data while using the same amount of information or less, and without getting more value from it, this usually indicates low data quality. Conversely, if storage expenses decrease or stay level while you get more insights, it is a strong sign of improved data quality.

- Data-to-error ratio. This data metric enables organizations to gauge the number of known errors within a dataset in relation to the dataset’s actual size. It provides essential insights into data quality and reliability.

- Data time-to-insight. This metric assesses the time needed to extract valuable insights from data. It is critical for evaluating data efficiency and its impact on decision-making processes.

- Empty value count. This metric quantifies the frequency of empty fields within a dataset. These occurrences often indicate data misplacement or missing information, shedding light on data quality.

- Data transformation error rate. This metric tracks the frequency of failures in data transformation operations. It provides insights into the reliability of data processing and transformation procedures.
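
Several of the metrics in this list are simple to compute. The Python sketch below shows one possible way to calculate the empty value count, the ratio of known errors to dataset size, and the data transformation error rate. The function names, the sample records, and the decision to treat each empty field as a known error are assumptions made for this example.

```python
def empty_value_count(rows, fields):
    """Count fields that are missing, None, or an empty string."""
    return sum(1 for row in rows for f in fields if row.get(f) in (None, ""))


def error_ratio(known_errors, total_records):
    """Known errors relative to the size of the dataset."""
    if total_records == 0:
        raise ValueError("dataset is empty")
    return known_errors / total_records


def transformation_error_rate(values, transform):
    """Share of values for which the transformation raises an error."""
    failures = 0
    for value in values:
        try:
            transform(value)
        except (TypeError, ValueError):
            failures += 1
    return failures / len(values) if values else 0.0


# Hypothetical contact records used only for this example.
rows = [
    {"name": "Ann", "email": "ann@example.com"},
    {"name": "", "email": "bob@example.com"},
    {"name": "Cy", "email": None},
    {"name": "Di"},
]
empties = empty_value_count(rows, ["name", "email"])  # 3 empty fields
ratio = error_ratio(empties, len(rows))  # 3 errors over 4 records
rate = transformation_error_rate(["10", "20", "n/a", "40"], int)  # 1 of 4 fails
```

Tracking these numbers over time is usually more informative than a single reading, since a rising trend points to a problem upstream.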

What Are Data Quality Management Tools?

Data quality is controlled with dedicated tools. Their main features include matching, profiling, metadata management, and monitoring. Three tools are used by many companies; here is some information about them.

The first tool is IBM InfoSphere Information Server for Data Quality. It is convenient because it can monitor data automatically, catching data errors and fixing them according to customers’ needs. The second tool is Informatica Data Quality. It uses a machine learning approach to manage data quality and is flexible across data types and workloads. Last but not least is Trillium DQ. It mostly provides batch data quality, but it handles big data as well.

Data Quality Management Best Practices

Data quality is crucial to any project, so it’s important to know the best practices. Here are some that will help you keep your data in check.

Making data quality a priority

The first step is to make data quality a priority and make sure every employee is engaged in the process. This takes some preparatory work: designing an enterprise-wide data strategy, creating clear user roles with rights and accountability, setting up a dashboard for monitoring, and so on.

Automating data entry

Data entry should be automated wherever possible, because it reduces the chance of error. If you can incorporate automated processes, do so: it will generally improve data quality.

Preventing duplicates, not just curing them

Whenever you notice a duplicate, clean it up immediately. Better still, stop duplicates from being created in the first place: preventing them makes every later process much easier to execute.
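
One common way to prevent duplicates is to check a normalized key before a record is saved. The Python sketch below illustrates the idea with an in-memory registry keyed by a lowercased, trimmed email address. The `CustomerRegistry` class and the choice of email as the matching key are assumptions for this example; a real system would more likely rely on a unique constraint in the database.

```python
class CustomerRegistry:
    """In-memory store that rejects records with an already known email."""

    def __init__(self):
        self._by_key = {}

    @staticmethod
    def _key(email):
        # Normalize so that case and surrounding spaces do not hide a duplicate.
        return email.strip().lower()

    def add(self, name, email):
        """Store the record and return True, or return False if it is a duplicate."""
        key = self._key(email)
        if key in self._by_key:
            return False
        self._by_key[key] = {"name": name, "email": email.strip()}
        return True

    def __len__(self):
        return len(self._by_key)


registry = CustomerRegistry()
first = registry.add("Ann Lee", "ann@example.com")
second = registry.add("Ann Lee", "  ANN@Example.com ")  # same person, rejected
```

Because the check happens at entry time, there is nothing to clean up later.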

Taking care of both master and metadata

While master data usually gets attention, you should also keep your metadata in mind. It provides the basic context without which you can’t do more advanced tasks.

Top 3 Sources of Low-Quality Data

High-quality data is the bedrock of informed choices, effective operations, and customer trust. However, the data landscape has its challenges, and one of the most pressing is the presence of low-quality data. To tackle this issue effectively, it is essential to understand where poor data comes from.

Now we delve into the three primary sources of low-quality data, shedding light on where and how data quality can be compromised. By identifying these sources, organizations can proactively enhance data quality, ultimately ensuring that their data is a reliable and valuable asset rather than a liability.

Source 1: Mergers and acquisitions

When two companies merge, their data systems may clash. Different data systems, legacy databases, and varied data collection methods can create discrepancies. Resolving conflicts can be complex, frequently requiring the establishment of intricate winner-loser matrices. Sometimes, the situation becomes so convoluted that clarity is lost, leading to conflicts between programmers and business analysts.

In anticipation of a merger or acquisition, involve IT leadership to proactively address these issues before finalizing any deals.

Source 2: Transitions from legacy systems

Companies may run their databases on old “legacy systems”. Transitioning away from them is challenging because a data system has distinct components: the database, the business rules, and the user interface. Neglecting differences in the business rule layers during data conversion can result in inaccuracies.

Ensure your transition team is well versed in both the legacy and the new systems to facilitate a smooth transition.

Source 3: User error

Human involvement in data entry ensures that user errors will persist. Mistakes like typos are inevitable, even among data-cleansing experts.

Simplify and optimize the forms your company uses for data entry to reduce the potential for user error. However, no efforts will eliminate human error, so automatic or even manual data validations are essential.
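
An automatic validation step can be as simple as a function that checks each field of a submitted form and returns a list of problems. The Python sketch below assumes a hypothetical sign-up form with `name`, `email`, and `birth_date` fields whose values are strings; the email pattern is deliberately loose and is not a full address validator.

```python
import re
from datetime import date

# Loose pattern: something@something.something, with no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_signup(form):
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    if not form.get("name", "").strip():
        errors.append("name is required")
    if not EMAIL_PATTERN.match(form.get("email", "")):
        errors.append("email is not valid")
    try:
        date.fromisoformat(form.get("birth_date", ""))
    except ValueError:
        errors.append("birth_date must be an ISO date such as 1990-04-12")
    return errors


ok = validate_signup(
    {"name": "Ann", "email": "ann@example.com", "birth_date": "1990-04-12"}
)
bad = validate_signup(
    {"name": " ", "email": "ann(at)example.com", "birth_date": "12/04/1990"}
)
```

Showing these messages next to the form lets the user fix a typo immediately, before the record reaches the database.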

Consider Inoxoft Your Trusted Partner

Inoxoft is a software development company that provides top-notch services for people all around the world. Our company will help you manage your data and keep it on a high level. Our dedicated team will make sure all your business needs are met.

Inoxoft provides QA automation testing services, IT system integration services, UI and UX development. We will also help you learn everything about the key features of quality data management.

Our company’s top priority is to guarantee our clients’ safety and a personalized experience. Contact us if you want to know more about data quality management!

Frequently Asked Questions

Why is data quality important?

If the data quality is low, it can be harmful for the company and can cause many errors in the process. That's why managing data quality is essential.

How do you manage data quality issues?

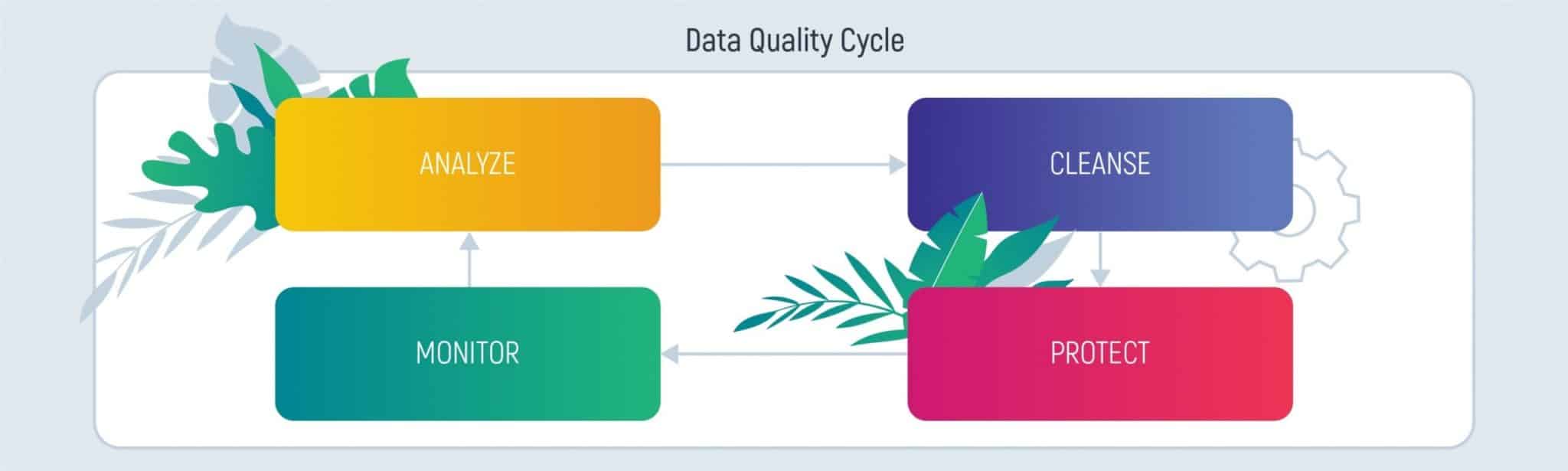

To keep data quality in check, follow four main steps: cleanse, protect, monitor, and analyze. Read the article if you are interested in data quality management best practices!

How does data management increase efficiency?

Data quality is the basis for properly executed processes. With quality data, core operations run quickly and effectively. If something is wrong with data quality, the business suffers.